The question above, posed by polymath Tyler Cowen in this episode, is the core of his conversation with host Russ Roberts. Roberts begins by asking Cowen what he thinks the biggest impacts of artificial intelligence will be, on the economy and elsewhere. The conversation covers the possibility of intelligence and/or wisdom in AI, and its potential “cognitive oomph.” (That’s a technical term, of course.)

It’s always a treat to sit in on a conversation between these two. And once you’ve done so, we hope you’ll consider the prompts below. As you know, we ♥ to hear from you!

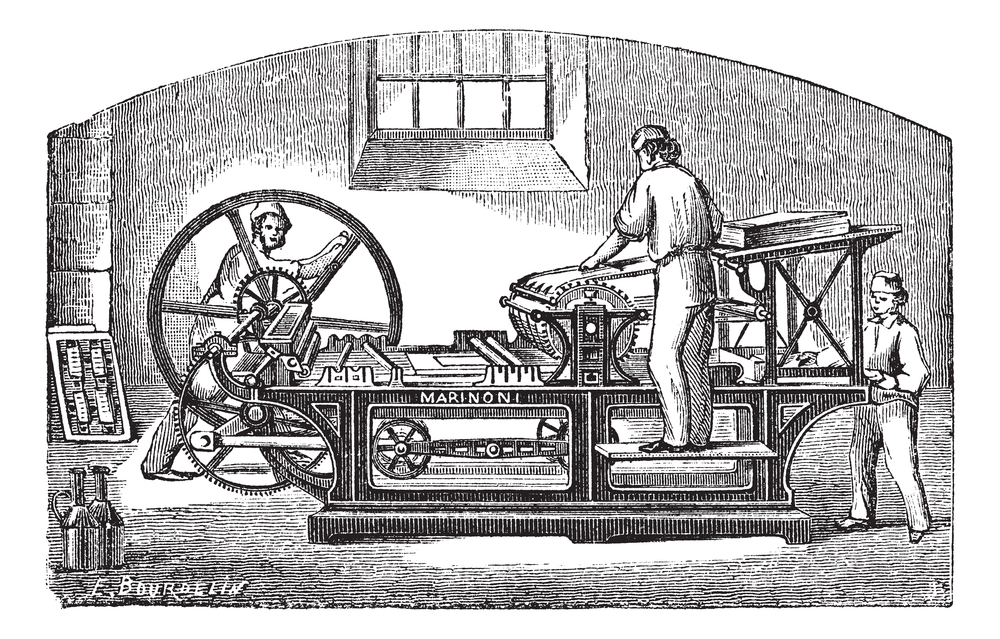

1- Cowen suggests that the closest historical analogs to AI are the printing press and electricity, stressing that major technological advances tend to be disruptive. But with great disruption, they often bring highly significant benefits. The question is, according to Cowen, how do you face up to them?

Are we willing to tolerate major disruptions whose benefits far exceed their costs, even when those costs are fairly high? What other historical analogs can you suggest that help shed light on the trade-offs we may anticipate with widespread adoption of AI? What sort of disruptions do you think AI will bring to bear, and how much should be tolerated, and why?

2- Roberts asks Cowen where he sees the greatest impact of AI now. Cowen suggests it can already serve as an “incredible interactive tutor,” and that it will soon eliminate a great deal of back office work. Roberts and Cowen agree that the impact on coding is already significant, and then Roberts turns to poetry.

Where do you see the greatest short-term effects of AI? To what extent do you agree with the suggestions offered by Cowen and Roberts? Would you let ChatGPT write a condolence note? What about a love poem? Why or why not?

3- Cowen asserts that alongside AI, how you behave in real life will become even more important. So back to poetry. Recall the story of Cyrano de Bergerac. How does this illustrate the need for transparency, and what does this suggest about real-life versus virtual engagement in things like dating apps? The classroom? Employment situations?

4- Roberts asks Cowen if there is anything he would regulate or slow down with regard to AI. Cowen continually insists that the question is not whether to regulate, but who should regulate and how. It’s about decentralization, he says: ensuring proper checks and balances and mobilizing decentralized knowledge.

What does Cowen mean when he says that Hayek and Polanyi should be at the center of the debate, rather than Arrow and Bentham? What role does he see for social norms in constraining AI? What role do you see for regulation and/or social norms? If there’s such an emphasis on decentralization, why is Cowen so adamant that we need to employ scientific modeling in the conversation?

5- Unlike several other recent guests, Cowen is optimistic about the future of AI, though he does not claim it comes without risk. He says, “There are these very serious risks, but there’s also a Millenarian tendency in human thought that tends to rise in volatile times. And, we have to think about how to deal with those Millenarian tendencies. They are themselves a form of risk: that our reaction to an event can be worse than the event itself, even if the event involves some very high costs.” What does this mean? Which do you think is more dangerous: AI itself, or the doomsday predictions it inspires? Explain.

Bonus Question: Says Cowen, “One of my predictions is that GPT models will raise the relative–and indeed–absolute wages of carpenters.” Why might this be the case? Double Bonus if you use supply and demand graphs in your explanation!

READER COMMENTS

Walter Donway

Jul 20 2023 at 11:31pm

It is getting a bit late in the evening, so this will be brief. But the topic of A.I. and A.G.I.–and the alleged “existential threat” they present–has captured my attention. How could it fail to do so with stories in the NYT every day, actually several stories, long articles in the Magazine Sundays, opinion pieces. But, here, just one perhaps fanciful observation.

What is the best analog to A.I.? Electricity? Printing. Good candidates.

Well, if the analog need not be technology, however, then perhaps we should nominate the invention of government. Smiley face here, if you wish. But observe how by the eighteenth century Age of Enlightenment intellectuals were obsessively devoting whole books, endless debates, and experiments addressed to the issue: How do we control government? How do we keep it out of religion? How do we prevent it from launching endless catastrophic wars? How do we control the money the king and the courtiers lavish on themselves?

And in America, the Founding Fathers had really few questions about government more urgent than: How to make it work FOR US? How to avoid its taking over? Turning against us?

How to avoid seeming instrumental perils such as factions, control of our speech and press, onerous taxation, the way that power leads to more power and to more power? How do we keep this invention from falling into the wrong hands? When must we actually fight it, tear it down–and will we be strong enough to do so?

And then a great, idealistic, really beneficial plan for finally making government work for us was launched in France. And did that iteration of the invention remain and control and serve its creators?

And I would suggest to you that a new iteration of the invention of government in Nazi Germany and Stalinist Russia (and how soon the PRC?) were genuine, real-life existential threats? Actually, existential catastrophes. Do you get more existential than was Nazi Germany domestically and then at war?

All because we invented government as a necessary benefit and never figured out how to control it? And still have not.

Jeez, perhaps A.G.I., like “catastrophic climate change,” is just a diversionary “existential threat” to keep us from dealing with our government. Mr. Biden says we gotta get A.I. under control for the good of “the people.”

Amy Willis

Jul 27 2023 at 10:51am

Walter, That’s a GREAT analogy. One might say the emergence of society predating the dawn of government. Maybe that stretches it too far?

I definitely like your inherent challenge of choosing an analogy not rooted in technology. I might similarly nominate education…
